In this article I will explain how you can use PowerShell to extract the right objects and merge those objects, and how to use Visual Studio Code to resolve most of the conflicts in the merged objects.

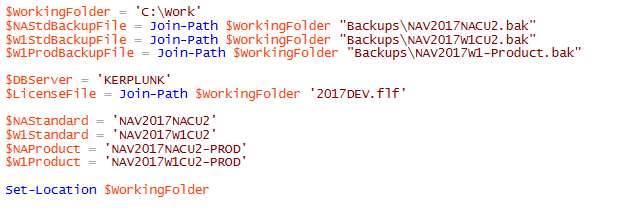

In a previous article, I explained how you can use PowerShell to create the environments. The script in this article actually uses the variables that are assigned in that earlier script. What I should really do is create a configuration file and load that from both scripts. I wanted to share what I have so far though, without a handy configuration file but hopefully helpful nonetheless.

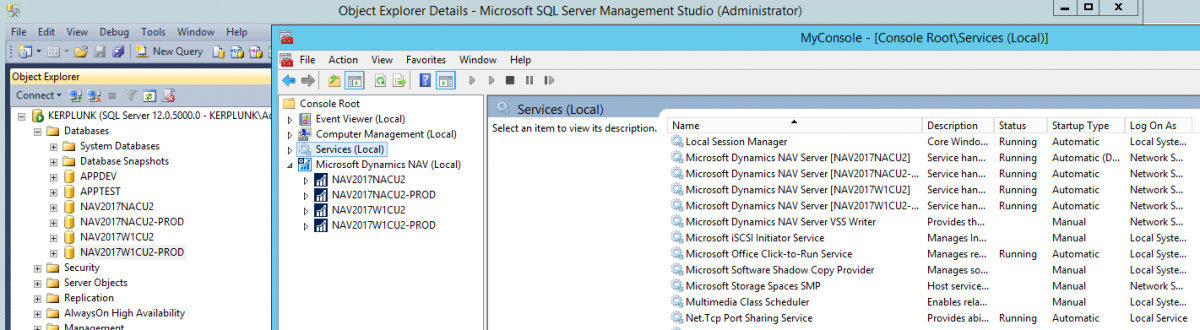

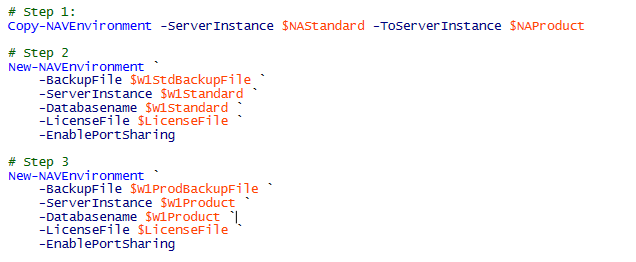

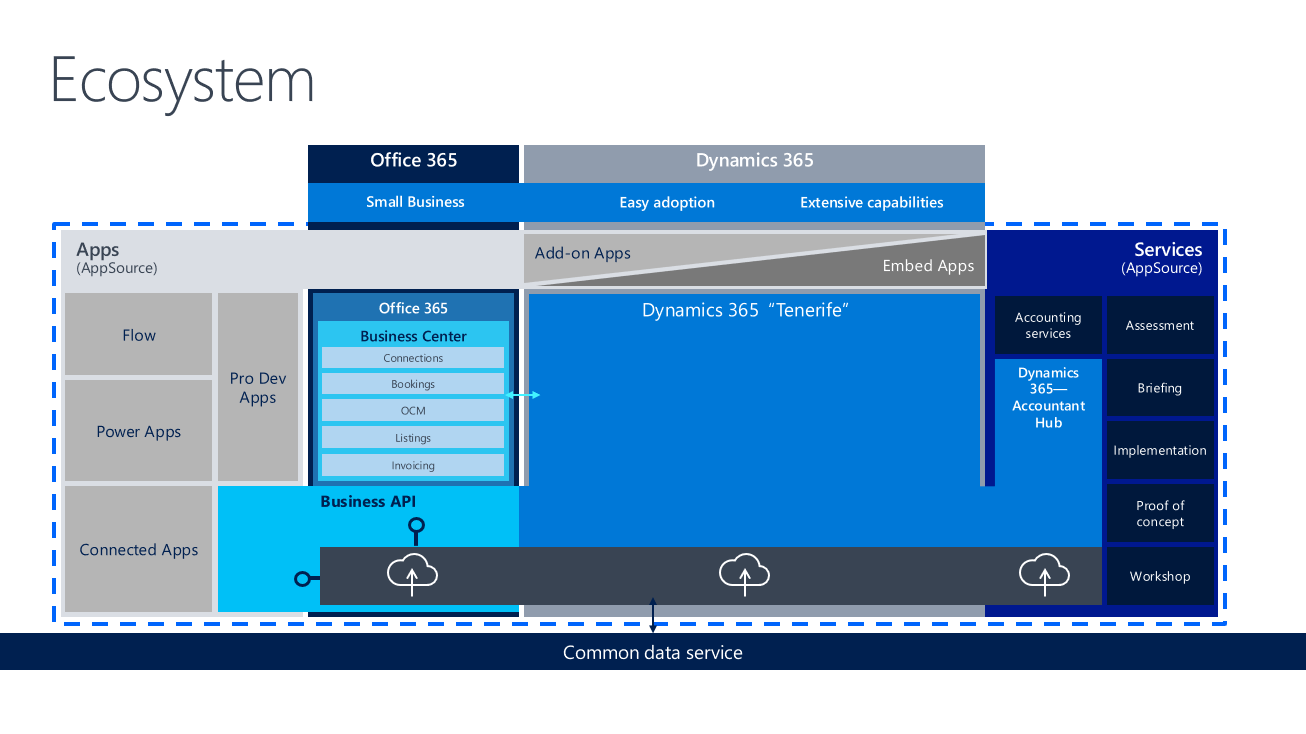

We start off with the 4 environments that were created in our last article: ORIGINAL, MODIFIED, TARGET, and RESULT. The first two environments contain the standard W1 and the ISV product. TARGET and RESULT are identical at this point, and they both contain the standard NA localization. All environments are on the same build (NAV 2017 CU2 in this case). Because I have a developer license that has insert rights in the ISV number range, my strategy is to merge the product modifications into the NA database. If you do not have those insert rights, then a better strategy would be to merge the NA localization into the product environment instead. Microsoft has recently added insert capabilities for the standard object ranges to regular developer licenses, for this particular purpose. As I was working through the conflict objects of this assignment, I was thinking that this may even be the best default strategy anyway.

Due to a limited amount of time, and a limited amount of PowerShell skill, I decided to approach this task pragmatically:

- Manually move the ISV specific objects to the RESULT environment

- Use PowerShell to export all the objects

- Manually eliminate the unmodified objects

- NOTE: as I learned later, Waldo’s PowerShell modules actually have logic to remove these after the merge, so this can be scripted as well

- Use PowerShell to merge the objects

- Use Visual Studio Code (AKA VSCode) to resolve the conflicts as much as possible

- Use PowerShell to join the objects

- Use C/SIDE to resolve any remaining conflicts

The conflict resolution is something that has to be done manually. Everything else can be scripted in PowerShell. During this process I asked Waldo for some help, and he explained that most of what I am doing here is already part of the merge sample scripts. In the Cloud Ready Software PowerShell module, there is a folder called “PSScripts”, and in that folder you will find a large number of scripts that you can use as examples to get started on your task. As you gain experience in using PowerShell, you will recognize a lot of useful features in those scripts, and you can modify them to your specific needs.

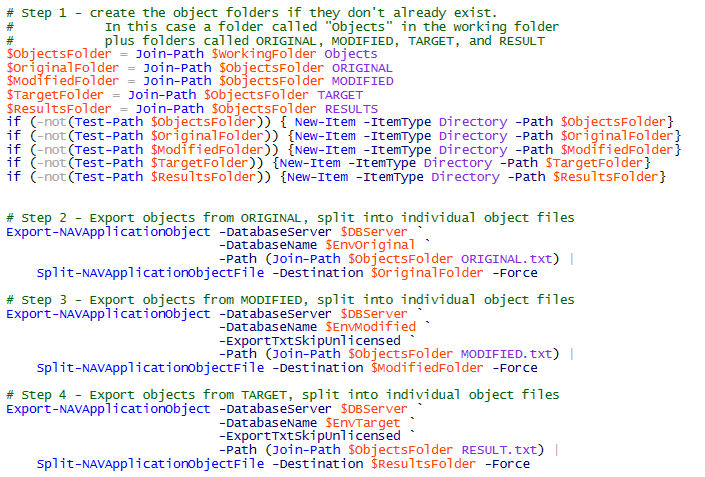

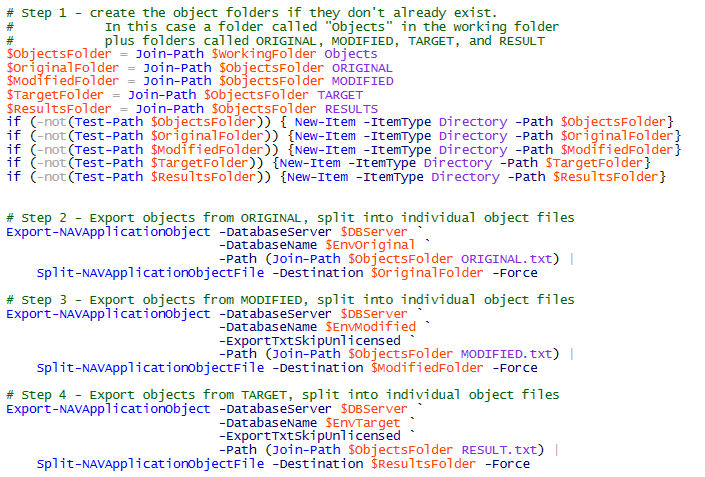

Alright, on to the details. The first part is to use PowerShell to extract the objects into their own folders. I also added commands to create the folder structure itself:
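For readers who want a starting point, here is a minimal sketch of what this step can look like. It is not the downloadable script itself. It assumes the standard NAV cmdlets `Export-NAVApplicationObject` and `Split-NAVApplicationObjectFile` are loaded, that each database is named after its environment, and that the per-environment folders live directly under the working folder. The function name, the default server name, and the folder layout are made up for illustration.

```powershell
# Hypothetical helper: exports each environment to Objects\<name>.txt
# and splits that file into one text file per object in <WorkingFolder>\<name>.
function Export-EnvironmentObject {
    param(
        [string]   $WorkingFolder,                          # e.g. 'C:\Merge' (made-up path)
        [string]   $DatabaseServer = 'localhost',           # assumed SQL Server instance
        [string[]] $Environments   = @('ORIGINAL', 'MODIFIED', 'TARGET')
    )

    $ObjectsFolder = Join-Path $WorkingFolder 'Objects'
    New-Item -Path $ObjectsFolder -ItemType Directory -Force | Out-Null

    foreach ($Environment in $Environments) {
        # One full object file per environment
        $ObjectFile = Join-Path $ObjectsFolder "$Environment.txt"
        Export-NAVApplicationObject -DatabaseServer $DatabaseServer -DatabaseName $Environment -Path $ObjectFile -Force

        # One folder per environment, holding the individual object files
        $SplitFolder = Join-Path $WorkingFolder $Environment
        New-Item -Path $SplitFolder -ItemType Directory -Force | Out-Null
        Split-NAVApplicationObjectFile -Source $ObjectFile -Destination $SplitFolder -Force
    }

    # Empty folder that will receive the merged objects later
    New-Item -Path (Join-Path $WorkingFolder 'RESULT') -ItemType Directory -Force | Out-Null
}
```

Calling `Export-EnvironmentObject -WorkingFolder 'C:\Merge'` would then build the whole folder structure in one go. An export without a filter takes every object in the database, so expect this to run for a while.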

At this point, you will have an “Objects” folder in your working folder, with full object files for ORIGINAL, MODIFIED, and TARGET. Those object files have then been split into individual object files in the ORIGINAL, MODIFIED, and TARGET folders. Before using PowerShell to merge those objects, I used a text compare tool (I like Scooter Software’s Beyond Compare) to eliminate unmodified objects. Remember, I only want to work on the modified objects, so I don’t have to worry about getting confused by objects that were not changed for the ISV product.
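I did this step by hand, but the same idea can be sketched in plain PowerShell. The example below is an assumption-laden alternative, not what I actually ran: it treats an object as unmodified when its file in MODIFIED is byte-for-byte identical to the file with the same name in ORIGINAL, and it assumes the split files carry the same names in all three folders. The function name is hypothetical, and `Get-FileHash` needs PowerShell 4 or later.

```powershell
# Hypothetical helper: removes objects the ISV product did not touch
# from the ORIGINAL, MODIFIED, and TARGET folders.
function Remove-UnmodifiedObject {
    param(
        [string] $OriginalFolder,
        [string] $ModifiedFolder,
        [string] $TargetFolder
    )

    foreach ($ModifiedFile in Get-ChildItem -Path $ModifiedFolder -File) {
        $OriginalFile = Join-Path $OriginalFolder $ModifiedFile.Name

        # No counterpart in ORIGINAL means a new object: leave it alone
        if (-not (Test-Path $OriginalFile)) { continue }

        $ModifiedHash = (Get-FileHash -Path $ModifiedFile.FullName).Hash
        $OriginalHash = (Get-FileHash -Path $OriginalFile).Hash

        if ($ModifiedHash -eq $OriginalHash) {
            # Identical content: drop the object from all three folders
            Remove-Item -Path $ModifiedFile.FullName, $OriginalFile
            $TargetFile = Join-Path $TargetFolder $ModifiedFile.Name
            if (Test-Path $TargetFile) { Remove-Item -Path $TargetFile }
        }
    }
}
```

Because this deletes files, it is worth running it on a copy of the folders first and comparing the result with what a text compare tool reports.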

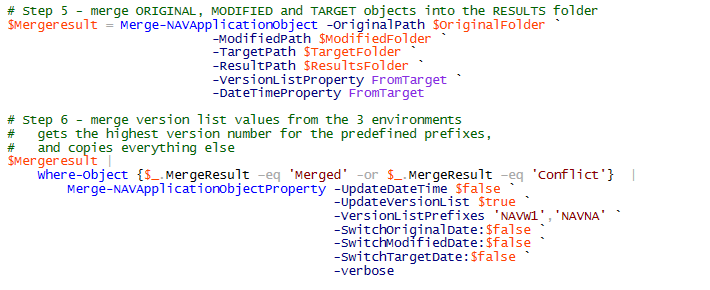

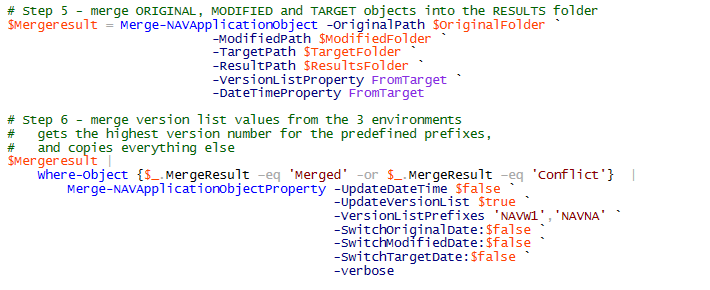

Now that we only have modified objects in all three object folders, we’ll use the merge Cmdlet to do the actual merging of the objects.
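A minimal sketch of the standard merge call is shown here, assuming the four folders sit directly under one working folder. It covers only the standard `Merge-NAVApplicationObject` Cmdlet; the Cloud Ready Software Cmdlet is left out because I do not want to guess at its parameters, so check that module's help for those. The wrapper function name and folder layout are hypothetical.

```powershell
# Hypothetical wrapper around the standard NAV merge Cmdlet.
function Merge-EnvironmentObject {
    param(
        [string] $WorkingFolder    # e.g. 'C:\Merge' (made-up path)
    )

    # Splatting keeps the four folder parameters readable
    $MergeParameters = @{
        OriginalPath = Join-Path $WorkingFolder 'ORIGINAL'
        ModifiedPath = Join-Path $WorkingFolder 'MODIFIED'
        TargetPath   = Join-Path $WorkingFolder 'TARGET'
        ResultPath   = Join-Path $WorkingFolder 'RESULT'
        Force        = $true       # overwrite existing files in RESULT without prompting
    }

    Merge-NAVApplicationObject @MergeParameters
}

# Usage: keep the output so it can be inspected or passed on afterwards
# $MergeResult = Merge-EnvironmentObject -WorkingFolder 'C:\Merge'
```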

Notice that the output of the “Merge-NAVApplicationObject” Cmdlet is loaded into the variable “$MergeResult”. This object is then piped into the “Merge-NAVApplicationObjectProperty” Cmdlet. The first Cmdlet is a standard NAV PowerShell Cmdlet, and the second one comes with the Cloud Ready Software modules. The standard merge Cmdlet does not merge the Version List property; it simply takes the Version List from one of the three environments. We can have a discussion about whether the Version List is even important anymore, especially if you use Source Code Management. The reality is that most NAV developers depend on proper tags in the Version List, so it is useful to merge those as well. All you need to do is specify the prefixes that you want to merge (in my case NAVW1 and NAVNA) and it will find the highest value for those prefixes. All other prefixes are simply copied into the RESULT objects.
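To make the Version List rule concrete, here is a small self-contained illustration of the idea: highest value wins for the listed prefixes, everything else is copied. This is my own sketch of the logic, not the code inside the Cloud Ready Software module, and both function names and the sample tags are invented for the example.

```powershell
# Compares two version suffixes; falls back to plain text comparison
# when a suffix is not a dotted number such as 10.00.00.15140.
function Compare-VersionSuffix {
    param([string] $Left, [string] $Right)
    $LeftVersion = $null; $RightVersion = $null
    if ([version]::TryParse($Left, [ref] $LeftVersion) -and
        [version]::TryParse($Right, [ref] $RightVersion)) {
        return $LeftVersion.CompareTo($RightVersion)
    }
    return [string]::CompareOrdinal($Left, $Right)
}

# Hypothetical illustration of merging Version Lists by prefix.
function Merge-VersionListSketch {
    param(
        [string[]] $VersionLists,   # e.g. the lists from ORIGINAL, MODIFIED, TARGET
        [string[]] $Prefixes        # prefixes to merge, e.g. 'NAVW1', 'NAVNA'
    )
    $Highest = @{}                  # prefix -> highest suffix seen so far
    $Others  = New-Object System.Collections.Generic.List[string]

    foreach ($List in $VersionLists) {
        $Tags = $List -split ',' | ForEach-Object { $_.Trim() } | Where-Object { $_ }
        foreach ($Tag in $Tags) {
            # The longest matching prefix wins
            $Prefix = $Prefixes | Where-Object { $Tag.StartsWith($_) } |
                Sort-Object Length -Descending | Select-Object -First 1
            if ($Prefix) {
                $Suffix  = $Tag.Substring($Prefix.Length)
                $Current = $Highest[$Prefix]
                if ($null -eq $Current -or (Compare-VersionSuffix $Suffix $Current) -gt 0) {
                    $Highest[$Prefix] = $Suffix
                }
            }
            elseif (-not $Others.Contains($Tag)) {
                $Others.Add($Tag)   # tags with other prefixes are copied as-is
            }
        }
    }

    $Result = @()
    foreach ($Prefix in $Prefixes) {
        if ($Highest.ContainsKey($Prefix)) { $Result += "$Prefix$($Highest[$Prefix])" }
    }
    $Result += $Others.ToArray()
    return ($Result -join ',')
}

# Made-up sample tags
Merge-VersionListSketch -Prefixes 'NAVW1', 'NAVNA' -VersionLists @(
    'NAVW110.00.00.14199',
    'NAVW110.00.00.14199,ISV1.00',
    'NAVW110.00.00.15140,NAVNA10.00.00.15140'
)
# → NAVW110.00.00.15140,NAVNA10.00.00.15140,ISV1.00
```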

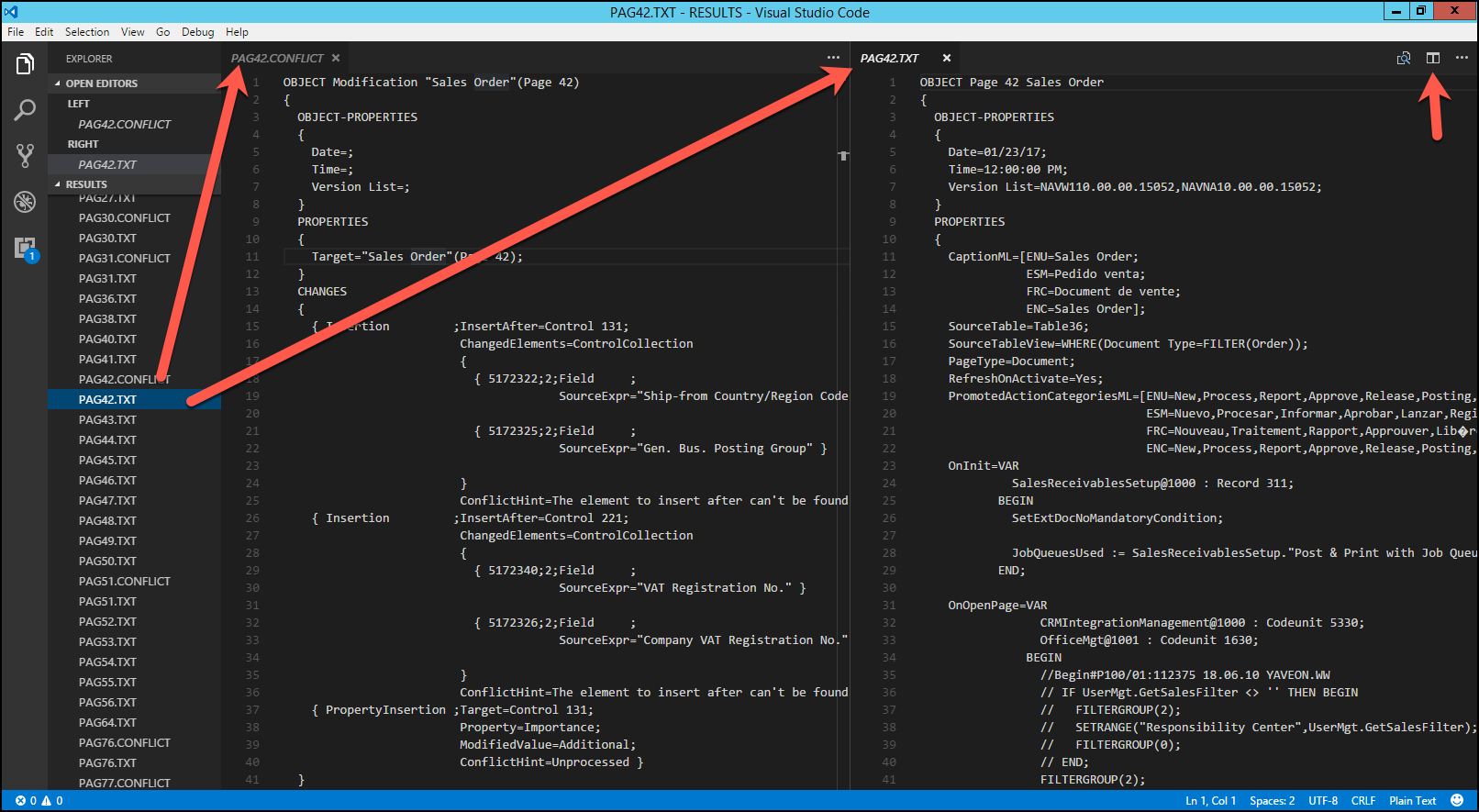

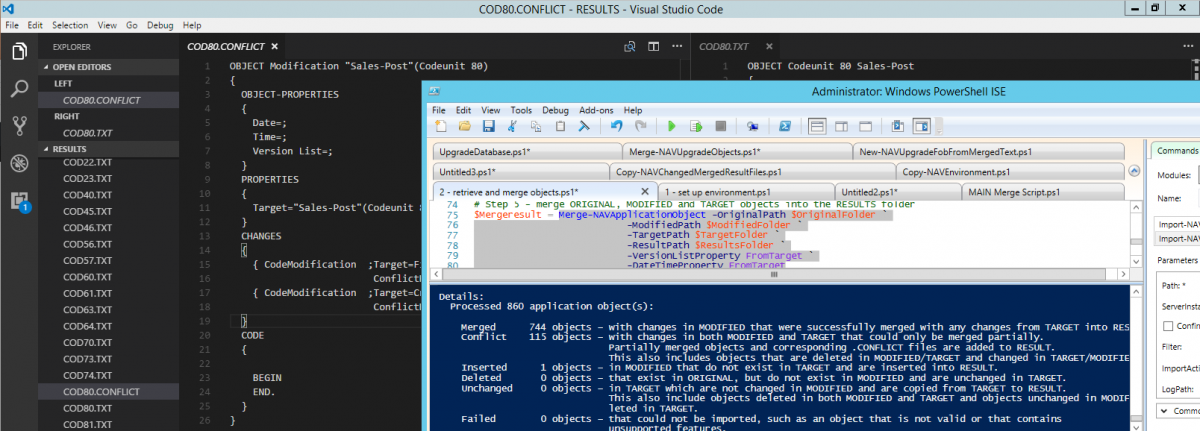

At this point, we have all merged files in the RESULT folder. This includes the objects that were merged successfully, but also the objects that the merge Cmdlet could not resolve, for instance when code was added at the same position in MODIFIED and in TARGET. Since we are going to have to learn how to use VSCode, I decided to use that to resolve the conflicts.

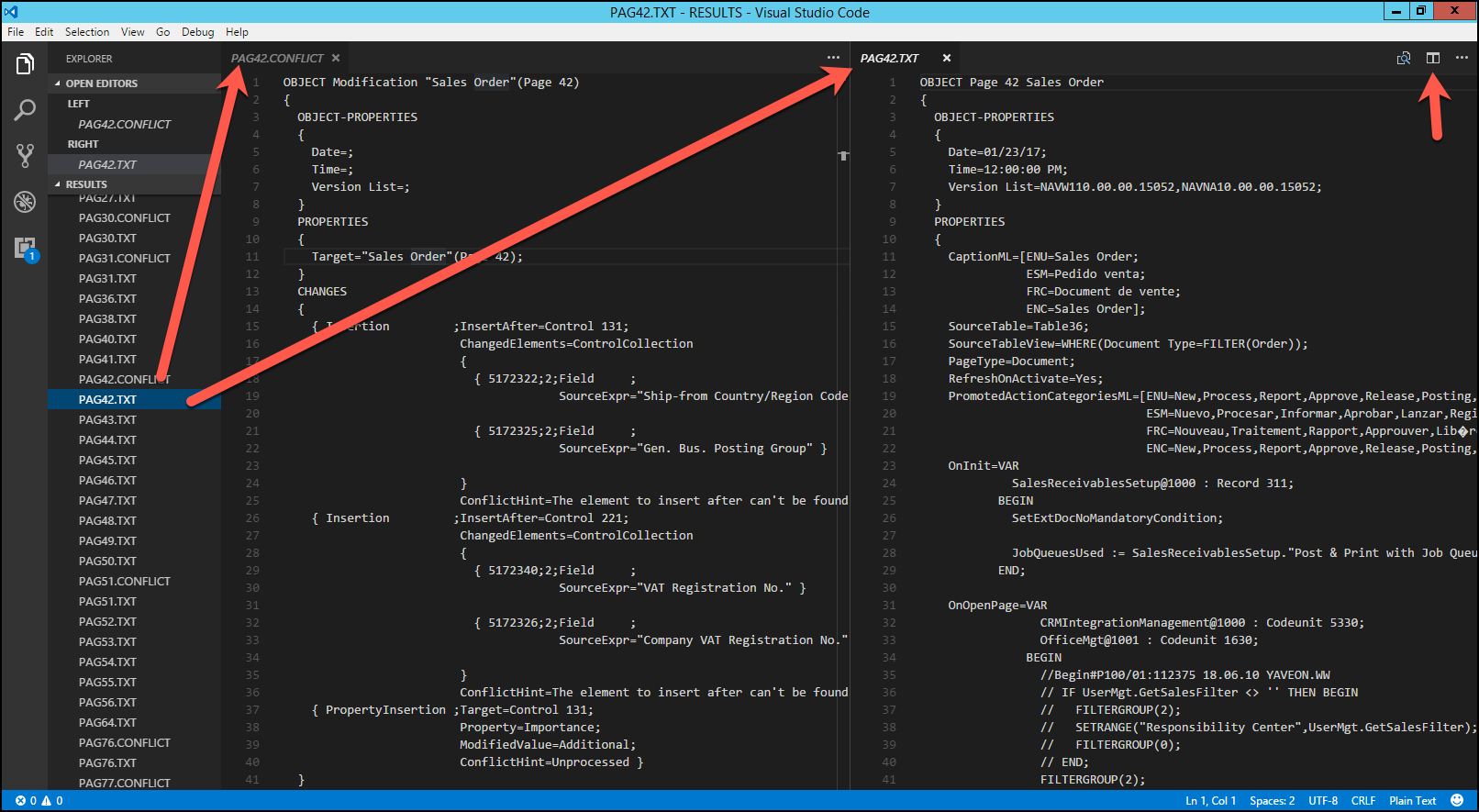

With VSCode installed, you can open the RESULT folder and see the content of this folder inside the file browser on the left-hand side. In the upper right-hand corner is a button that you can use to split the screen into two (and even three) editor windows. This is very useful for resolving merge conflicts, because you can open the conflict file on one side, and the object file on the other side. You are editing the actual object file here, so you may want to take a backup copy of the folder before you get started.
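Taking that backup and getting a work list of conflicts is easy to script. The sketch below assumes the conflict files in the RESULT folder carry a `.CONFLICT` extension; both function names are hypothetical.

```powershell
# Hypothetical helper: copies the RESULT folder to a timestamped sibling folder
# and returns the path of the copy.
function Copy-ResultFolderBackup {
    param([string] $ResultFolder)

    $BackupFolder = "$ResultFolder-backup-$(Get-Date -Format 'yyyyMMdd-HHmmss')"
    Copy-Item -Path $ResultFolder -Destination $BackupFolder -Recurse
    return $BackupFolder
}

# Hypothetical helper: lists the conflict files that still need attention.
function Get-ConflictFile {
    param([string] $ResultFolder)

    Get-ChildItem -Path $ResultFolder -Filter '*.CONFLICT' -File
}

# Usage (made-up path):
# Copy-ResultFolderBackup -ResultFolder 'C:\Merge\RESULT'
# Get-ConflictFile -ResultFolder 'C:\Merge\RESULT' | Select-Object -ExpandProperty Name
```

If the `code` command is on your PATH, `code C:\Merge\RESULT` opens the folder in VSCode straight from the PowerShell prompt.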


Now what you must understand is that the conflict files contain only the pieces that the merge Cmdlet could not figure out. In a total of 860 objects, with 6956 individual changes, it was able to merge 96.4% of those changes. An object that may have 4 conflicts can also have a ton of other changes that the merge Cmdlet merged successfully. All YOU have to do is focus on the ones that need manual attention. For instance, codeunits 80 and 90 had a TON of modifications, but the Cmdlet only needed help with 3 of them.

I had a total of 115 conflict files, and I could completely resolve 112 of them in VSCode. I made a note of the few that remained, and decided to import those unchanged into C/SIDE, so I could finish those off in the proper IDE.

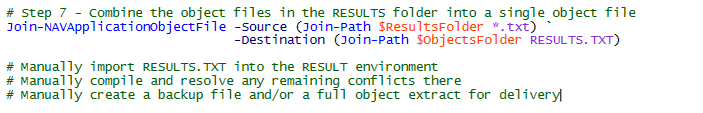

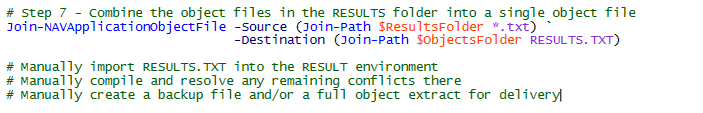

The last PowerShell command that I used is to create a single object file, which can then be imported into C/SIDE. Then I finished the last few remaining object files, and was able to compile all objects from there.
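As a sketch of that last step, the standard `Join-NAVApplicationObjectFile` Cmdlet can combine the individual files into one. The wrapper name, the `*.TXT` filter, and the paths are assumptions for illustration.

```powershell
# Hypothetical wrapper: joins all object text files in RESULT into a single file
# that can be imported into C/SIDE.
function Join-MergedObject {
    param(
        [string] $ResultFolder,   # e.g. 'C:\Merge\RESULT' (made-up path)
        [string] $Destination     # e.g. 'C:\Merge\Objects\RESULT.txt' (made-up path)
    )

    # Only the object files are joined; conflict files are left out by the filter
    Join-NAVApplicationObjectFile -Source (Join-Path $ResultFolder '*.TXT') -Destination $Destination -Force
}

# Usage:
# Join-MergedObject -ResultFolder 'C:\Merge\RESULT' -Destination 'C:\Merge\Objects\RESULT.txt'
```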

I’ve done many of these merges completely manually. To say that I was skeptical that PowerShell would do a good job is putting it mildly. I flat out did not trust the merge Cmdlet; surely it could not do as good a job as I could. I was wrong. I checked a bunch of objects to see if I could find any mistakes and I could not find any. Not only did the merge Cmdlet do a fine job at merging the objects, it did so in about 5 minutes flat.

You can download the scripts here.

Instead of having to manually merge almost 900 objects, all I had to do was focus on the conflicts. Usually, a vertical merge like this would take me anywhere from 2 to 4 weeks, and I was able to finish this one in less than two days. Figuring out the PowerShell scripts took me much longer, but I will be able to use those for the next merge task.
