One of my clients asked me to add Document Attachments to Bank Deposits. Thinking this was a quick and easy one, I added the factbox, set the link, and went on with my day. When my client told me they were seeing all attachments for all records, I realized it was a little more involved. It took some time to figure out how it actually works, and this post explains the whole thing, including how to get document attachments through the posting process.

How Does it Really Work?

It’s not so simple because Document Attachment works with a RecRef instead of a hardcoded table relationship. Take a look at the Document Attachment table (table 1173 in the base app) for the field definitions. I’ll focus on single-field PK records in this post, that is, tables where a single Code20 field holds the unique identifier of the record. A compound PK works the same way; you just need to set more fields.

Standard BC has a limited number of tables that have document attachment capabilities. If you want to add another table, whether a custom table or another standard table, you will need to subscribe to some events to make that work. Let’s first look at how standard document attachments work.

Open Document Attachments

Let’s take a look at the Customer Card page in standard BC. The “Attachments” action has the following OnAction trigger code:

    trigger OnAction()
    var
        DocumentAttachmentDetails: Page "Document Attachment Details";
        RecRef: RecordRef;
    begin
        RecRef.GetTable(Rec);
        DocumentAttachmentDetails.OpenForRecRef(RecRef);
        DocumentAttachmentDetails.RunModal();
    end;

The “Document Attachment Details” page is where the magic happens. If you drill down into the ‘OpenForRecRef’ function, you will see that it takes a RecRef variable (which in this example points at the current Customer record) and sets a filter on the table ID of the RecRef and on the value of the PK field of the record itself. This function hardcodes all the tables in the standard BC app that have Document Attachments.

The important thing in this function is the call to OnAfterOpenForRecRef, which is an event publisher that we will subscribe to later. This event gives you the capability to set a filter to any table. Note that this is just a filter on a page. All that this does is make sure that you only see the document attachments for this particular record.

The Document Attachments Factbox

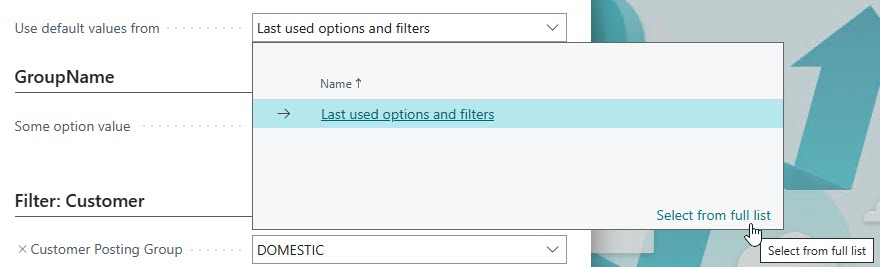

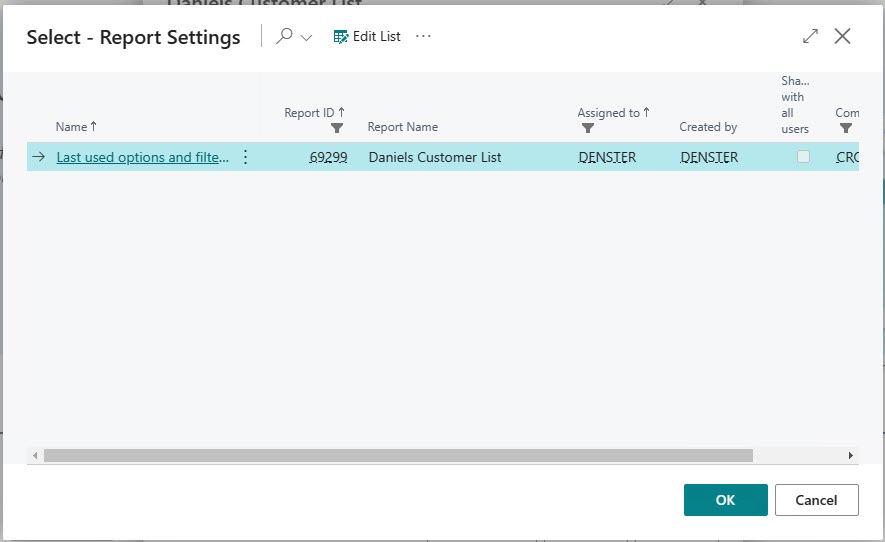

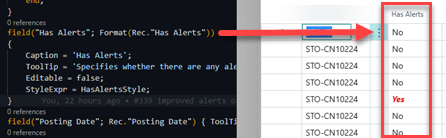

Another way to give the user access to the document attachments is the “Document Attachment Factbox” that you can see in the factboxes area. In the standard app on the Customer Card, the SubPageLink property links the “Table ID” field to a hardcoded value of 18 (the Customer table’s object ID). If you want to create your own link, you should use the table name instead of its number. We will be linking to the “Bank Deposit Header” table, so our constant value will be Database::"Bank Deposit Header".

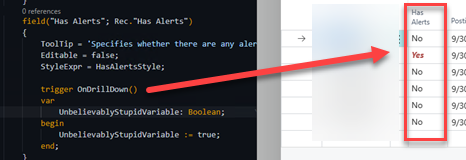

Take a look at the OnDrillDown trigger of the NumberOfRecords field in the factbox. It first sets up the RecRef, which is again hardcoded for the standard range of tables that have document attachments. Then it executes the same logic on the Document Attachment Details page as the action mentioned above.

Note the OnBeforeDrillDown function call in the ‘else’ leg of the ‘case’ statement. This is the event that you need to subscribe to in order to properly filter the document attachments for non-standard tables.

What is important to understand about this particular factbox is that records are not entered directly into the factbox. You have to click an action that adds the record, and as a result the “No.” field in the factbox is NOT populated by the page link.

Creating New Document Attachments

So far we’ve only looked at how to display the proper document attachments to the user. What’s left is how BC actually stores these records. The tricky part is not getting the file itself, but how BC gets the key value from the RecRef. The function that stores the values from the RecRef into the document attachment is called InitFieldsFromRecRef. You can see that this function again goes through all of the same hardcoded standard BC tables that we’ve seen before, and it provides an event publisher called OnAfterInitFieldsFromRecRef that you can use for additional tables.

Document Attachments for Additional Tables

Alright, now let’s put it all together for a new table. I recently added this for Bank Deposits for a client of mine, so I’ll use the same table to illustrate. I’ll focus on the new ‘Bank Deposit Header’ and its Posted sibling. The new implementation of bank deposits can be found in the “_Exclude_Bank Deposits” app that is part of standard BC.

To get started, create a page extension for the Bank Deposit and the Posted Bank Deposit pages, and add the Document Attachment factbox. You can copy this from the Customer Card and change the links appropriately. Note that the records that you will see when you drill down into the details are filtered on the table but not by the PK value of the record that you are looking at. In other words, just adding this factbox will only give you a list of all the Document Attachments that are linked to ALL (Posted) Bank Deposits.
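As a minimal sketch of that step, the two page extensions might look something like this. The object IDs (50100, 50101), the extension names, and the control names are placeholders I made up for illustration. The sketch also assumes that both pages already have a factboxes area and that the link mirrors the one on the Customer Card.

```al
// Sketch only: IDs, extension names, and control names are hypothetical placeholders.
pageextension 50100 "Bank Deposit Doc. Attach." extends "Bank Deposit"
{
    layout
    {
        addfirst(factboxes)
        {
            // Same factbox as on the Customer Card, linked to the unposted header table
            part(BankDepositAttachments; "Document Attachment Factbox")
            {
                ApplicationArea = All;
                Caption = 'Attachments';
                SubPageLink = "Table ID" = const(Database::"Bank Deposit Header"),
                              "No." = field("No.");
            }
        }
    }
}

pageextension 50101 "Posted Bank Dep. Doc. Attach." extends "Posted Bank Deposit"
{
    layout
    {
        addfirst(factboxes)
        {
            // Same factbox again, this time linked to the posted header table
            part(PostedBankDepositAttachments; "Document Attachment Factbox")
            {
                ApplicationArea = All;
                Caption = 'Attachments';
                SubPageLink = "Table ID" = const(Database::"Posted Bank Deposit Header"),
                              "No." = field("No.");
            }
        }
    }
}
```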

Finally, create a new codeunit to hold the event subscribers; I called mine ‘DocAttachmentSubs’.
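For orientation, all of the subscribers in the following sections live inside that one codeunit. A bare shell could look like this; the object ID 50100 is a placeholder, so pick one from your own range.

```al
// Sketch only: the object ID is a hypothetical placeholder.
codeunit 50100 DocAttachmentSubs
{
    // The event subscribers and the helper procedure from the sections below go in here.
}
```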

Filter the Details

First, we need to make sure that the “Document Attachment Details” page is filtered on the correct Bank Deposit number. For this we subscribe to the OnAfterOpenForRecRef event.

    [EventSubscriber(ObjectType::Page, Page::"Document Attachment Details", 'OnAfterOpenForRecRef', '', true, true)]
    local procedure DocAttDetailsPageOnAfterOpenForRecRef(var DocumentAttachment: Record "Document Attachment"; var RecRef: RecordRef)
    var
        MyFieldRef: FieldRef;
        RecNo: Code[20];
    begin
        if RecRef.Number in [Database::"Bank Deposit Header", Database::"Posted Bank Deposit Header"] then begin
            MyFieldRef := RecRef.Field(1); // field 1 is the "No." field in both tables
            RecNo := MyFieldRef.Value();
            DocumentAttachment.SetRange("No.", RecNo);
        end;
    end;

Filter the Factbox

Next, we need to make sure that the details are filtered properly when the user clicks the DrillDown from the document attachment factbox. For this we subscribe to the OnBeforeDrillDown event in the factbox.

    [EventSubscriber(ObjectType::Page, Page::"Document Attachment Factbox", 'OnBeforeDrillDown', '', true, true)]
    local procedure DocAttFactboxOnBeforeDrillDown(DocumentAttachment: Record "Document Attachment"; var RecRef: RecordRef)
    var
        BankDepositHeader: Record "Bank Deposit Header";
        PostedBankDepositHeader: Record "Posted Bank Deposit Header";
    begin
        case DocumentAttachment."Table ID" of
            Database::"Bank Deposit Header":
                begin
                    RecRef.Open(Database::"Bank Deposit Header");
                    if BankDepositHeader.Get(DocumentAttachment."No.") then
                        RecRef.GetTable(BankDepositHeader);
                end;
            Database::"Posted Bank Deposit Header":
                begin
                    RecRef.Open(Database::"Posted Bank Deposit Header");
                    if PostedBankDepositHeader.Get(DocumentAttachment."No.") then
                        RecRef.GetTable(PostedBankDepositHeader);
                end;
        end;
    end;

So now when the user clicks the drilldown, BC will set the RecRef to look at the (Posted) Bank Deposit, which is then sent into the details page, which then knows how to properly filter.

Set the Right Link

The last thing we need is to make sure that new document attachments have the (Posted) Bank Deposit number, by subscribing to the OnAfterInitFieldsFromRecRef event of the Document Attachment table itself.

    [EventSubscriber(ObjectType::Table, Database::"Document Attachment", 'OnAfterInitFieldsFromRecRef', '', true, true)]
    local procedure DocAttTableOnAfterInitFieldsFromRecRef(var DocumentAttachment: Record "Document Attachment"; var RecRef: RecordRef)
    var
        MyFieldRef: FieldRef;
        RecNo: Code[20];
    begin
        if RecRef.Number in [Database::"Bank Deposit Header", Database::"Posted Bank Deposit Header"] then begin
            MyFieldRef := RecRef.Field(1); // field 1 is the "No." field in both tables
            RecNo := MyFieldRef.Value();
            DocumentAttachment.Validate("No.", RecNo);
        end;
    end;

You should now be able to create new document attachments for unposted and posted bank deposits. All that’s left is to get document attachments to flow through the posting process.

Posting

Document Attachments are not intrinsically difficult. In the end they are just records in the database, identified by their Table ID and their PK values. For Bank Deposits the PK is a single Code20 field, and there are two versions of the table. All we need to do is write a little loopyloopy that reads the attachments for the unposted record, copies them to the posted record, and gets rid of the old ones. It would be cool to just change the records, but since the Table ID and the record identifier are both part of the PK, that would take a ‘Rename’, and then you’d have to sit there and click confirmations all day long.

For Bank Deposits, you can use the OnAfterBankDepositPost event in the “Bank Deposit-Post” codeunit.

    [EventSubscriber(ObjectType::Codeunit, Codeunit::"Bank Deposit-Post", 'OnAfterBankDepositPost', '', true, true)]
    local procedure BankDepositPostOnAfterBankDepositPost(BankDepositHeader: Record "Bank Deposit Header"; var PostedBankDepositHeader: Record "Posted Bank Deposit Header")
    begin
        MoveAttachmentsToPostedDeposit(Database::"Bank Deposit Header", BankDepositHeader."No.",
            Database::"Posted Bank Deposit Header", PostedBankDepositHeader."No.");
    end;

    local procedure MoveAttachmentsToPostedDeposit(FromTableId: Integer; FromNo: Code[20]; ToTableId: Integer; ToNo: Code[20])
    var
        FromDocumentAttachment: Record "Document Attachment";
        ToDocumentAttachment: Record "Document Attachment";
    begin
        // Find every attachment linked to the unposted deposit
        FromDocumentAttachment.SetRange("Table ID", FromTableId);
        FromDocumentAttachment.SetRange("No.", FromNo);
        if FromDocumentAttachment.FindSet() then begin
            repeat
                // Copy the attachment and re-link the copy to the posted deposit
                Clear(ToDocumentAttachment);
                ToDocumentAttachment.Init();
                ToDocumentAttachment.TransferFields(FromDocumentAttachment);
                ToDocumentAttachment.Validate("Table ID", ToTableId);
                ToDocumentAttachment.Validate("No.", ToNo);
                ToDocumentAttachment.Insert(true);
            until FromDocumentAttachment.Next() = 0;
            // Remove the originals that were linked to the unposted deposit
            FromDocumentAttachment.DeleteAll();
        end;
    end;

I have this in a separate function because I also had to make it work for the old Deposit implementation. You could totally combine this into a single event subscriber.

I’m thinking about creating a video about this topic. Let me know if this was useful in the comments and if you’d like to see the video.