One of the standard entries in the ‘Problems’ pane of an AL workspace in VS Code is a warning that you should no longer use BLOB as a data type for images. Fixing this was at the bottom of my priority list until I had a request to create a new image for a standard field. In this post I’ll show you how easy it is.

Media Field

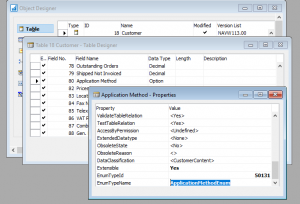

The first element you need is a field in the table. Instead of a BLOB field with subtype Bitmap, you now need a field of type ‘Media’. There is also a data type called ‘MediaSet’, but that’s not what we are going to use here. See the Microsoft Docs for the difference between Media and MediaSet. The field is not directly editable because we will import the image through a function.
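
To make the contrast concrete, here is a minimal sketch of the two declarations side by side. The table, its object ID and the field names are hypothetical and exist only for illustration. A Media field holds a single file, while a MediaSet field holds a collection of files.

```al
// Hypothetical table, only meant to contrast the two data types.
table 50110 MediaSketchDnStr
{
    Caption = 'Media Sketch';
    DataClassification = CustomerContent;

    fields
    {
        field(1; "No."; Code[20])
        {
            Caption = 'No.';
        }
        // One image per record, which is what this post uses.
        field(2; SinglePicture; Media)
        {
            Caption = 'Single Picture';
        }
        // A collection of images per record.
        field(3; PictureCollection; MediaSet)
        {
            Caption = 'Picture Collection';
        }
    }

    keys
    {
        key(PK; "No.")
        {
            Clustered = true;
        }
    }
}
```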

In addition to the field itself, you need a function to import an image file into the field. In the object below I have a simple table called ‘Book’ with a number, a title and a cover. The ImportCover function does the import. It is implemented as an internal procedure, so it can only be called from within the app. You can of course set the scope as you see fit.

    table 50100 BookDnStr
    {
        Caption = 'Book';
        DataClassification = CustomerContent;

        fields
        {
            field(10; "No."; Code[20])
            {
                Caption = 'No.';
                DataClassification = CustomerContent;
            }
            field(20; "Title"; Text[100])
            {
                Caption = 'Title';
                DataClassification = CustomerContent;
            }
            field(30; Cover; Media)
            {
                Caption = 'Cover';
                Editable = false;
            }
        }

        keys
        {
            key(PK; "No.")
            {
                Clustered = true;
            }
        }

        internal procedure ImportCover()
        var
            CoverInStream: InStream;
            FileName: Text;
            ReplaceCoverQst: Label 'The existing Cover will be replaced. Do you want to continue?';
        begin
            Rec.TestField("No.");
            if Rec.Cover.HasValue then
                if not Confirm(ReplaceCoverQst, true) then
                    exit;
            if UploadIntoStream('Import', '', 'All Files (*.*)|*.*', FileName, CoverInStream) then begin
                Rec.Cover.ImportStream(CoverInStream, FileName);
                Rec.Modify(true);
            end;
        end;
    }
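
As a companion to the import, here is a rough sketch of how the stored cover could be downloaded again. It is not part of the original solution. The codeunit, its object ID and the ‘.jpg’ file extension are assumptions for illustration only; the stored file may well be in another format, and the book number may contain characters that are not valid in a file name.

```al
// Hypothetical helper codeunit; name and ID are illustrative.
codeunit 50102 BookCoverExportDnStr
{
    procedure ExportCover(Book: Record BookDnStr)
    var
        TempBlob: Codeunit "Temp Blob";
        CoverOutStream: OutStream;
        CoverInStream: InStream;
        FileName: Text;
    begin
        // Nothing to export when no cover has been imported yet.
        if not Book.Cover.HasValue then
            exit;

        // Copy the media content into a temporary blob so it can be read back as an InStream.
        TempBlob.CreateOutStream(CoverOutStream);
        Book.Cover.ExportStream(CoverOutStream);
        TempBlob.CreateInStream(CoverInStream);

        // Assumption: the cover is a JPEG. Adjust the extension to the files you actually store.
        FileName := StrSubstNo('%1.jpg', Book."No.");
        DownloadFromStream(CoverInStream, 'Export', '', 'All Files (*.*)|*.*', FileName);
    end;
}
```

You could call a procedure like this from an extra ‘Export’ action next to the import action.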

Factbox for the image

Similar to how Item images have been implemented, you can create a factbox to show the book cover and add that to the Book Card. Using a factbox also makes it easy to keep the related actions close to the control.

    page 50100 BookCoverDnStr
    {
        Caption = 'Book Cover';
        DeleteAllowed = false;
        InsertAllowed = false;
        LinksAllowed = false;
        PageType = CardPart;
        SourceTable = BookDnStr;

        layout
        {
            area(content)
            {
                field(Cover; Rec.Cover)
                {
                    ApplicationArea = All;
                    ShowCaption = false;
                    ToolTip = 'Specifies the cover art for the current book';
                }
            }
        }

        actions
        {
            area(processing)
            {
                action(ImportCoverDnStr)
                {
                    ApplicationArea = All;
                    Caption = 'Import';
                    Image = Import;
                    ToolTip = 'Import a picture file for the Book''s cover art.';

                    trigger OnAction()
                    begin
                        Rec.ImportCover();
                    end;
                }
                action(DeleteCoverDnStr)
                {
                    ApplicationArea = All;
                    Caption = 'Delete';
                    Enabled = DeleteEnabled;
                    Image = Delete;
                    ToolTip = 'Delete the cover.';

                    trigger OnAction()
                    begin
                        if not Confirm(DeleteImageQst) then
                            exit;
                        Clear(Rec.Cover);
                        Rec.Modify(true);
                    end;
                }
            }
        }

        trigger OnAfterGetCurrRecord()
        begin
            SetEditableOnPictureActions();
        end;

        var
            DeleteImageQst: Label 'Are you sure you want to delete the cover art?';
            DeleteEnabled: Boolean;

        local procedure SetEditableOnPictureActions()
        begin
            DeleteEnabled := Rec.Cover.HasValue;
        end;
    }

Add to the Page

All that is left is to add the factbox to the page where you have the import action. In this case I have a very simple Card page for the book, and the factbox is shown to the side.

    page 50101 BookCardDnStr
    {
        Caption = 'Book Card';
        PageType = Card;
        ApplicationArea = All;
        UsageCategory = Administration;
        SourceTable = BookDnStr;

        layout
        {
            area(Content)
            {
                group(General)
                {
                    field("No."; Rec."No.")
                    {
                        ToolTip = 'Specifies the value of the No. field.';
                        ApplicationArea = All;
                    }
                    field(Title; Rec.Title)
                    {
                        ToolTip = 'Specifies the value of the Title field.';
                        ApplicationArea = All;
                    }
                }
            }
            area(FactBoxes)
            {
                part(BookCover; BookCoverDnStr)
                {
                    ApplicationArea = All;
                    SubPageLink = "No." = field("No.");
                }
            }
        }

        actions
        {
            area(Processing)
            {
                group(Book)
                {
                    action(ImportCover)
                    {
                        Caption = 'Import Cover Art';
                        ApplicationArea = All;
                        ToolTip = 'Executes the Import Cover action';
                        Image = Import;
                        Promoted = true;
                        PromotedCategory = Process;
                        PromotedOnly = true;

                        trigger OnAction()
                        begin
                            Rec.ImportCover();
                        end;
                    }
                }
            }
        }
    }
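
Because the cover lives in its own factbox page, the same part can in principle be reused elsewhere. The sketch below assumes a hypothetical list page (its name and object ID are made up for illustration) that shows the cover of the selected book next to the list, using the same SubPageLink as the card.

```al
// Hypothetical list page that reuses the BookCoverDnStr factbox.
page 50103 BookListDnStr
{
    Caption = 'Books';
    PageType = List;
    ApplicationArea = All;
    UsageCategory = Lists;
    SourceTable = BookDnStr;
    CardPageId = BookCardDnStr;

    layout
    {
        area(Content)
        {
            repeater(Books)
            {
                field("No."; Rec."No.")
                {
                    ApplicationArea = All;
                    ToolTip = 'Specifies the value of the No. field.';
                }
                field(Title; Rec.Title)
                {
                    ApplicationArea = All;
                    ToolTip = 'Specifies the value of the Title field.';
                }
            }
        }
        area(FactBoxes)
        {
            // Same link as on the card: the factbox follows the selected book.
            part(BookCover; BookCoverDnStr)
            {
                ApplicationArea = All;
                SubPageLink = "No." = field("No.");
            }
        }
    }
}
```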

This was a fun one to figure out. Let me know in the comments if it was useful to you.