For a while I wasn’t sure if I should write about this. I’m still not sure if it’s a good idea to share something this personal. Take a look at that picture: it’s me playing golf with a friend in Tucson, AZ on Saturday, July 23, 2016. I had just striped a perfect drive right down the center of the fairway (all 225 yards of it; I’m not very long). After this round of golf we’d meet up with our wives for lunch, go see the new Star Wars movie, and spend the rest of the day having a good time all around. Little did I know that my life was about to take a decidedly dark turn.

As the evening progressed, I gradually started feeling worse and worse. My heart was racing, and a pain was growing in my chest, neck and arms. On the way back to our hotel, I couldn’t get my heart rate under control, and I started to panic. My wife decided that something was very wrong and took me to the ER. To make a very long story short: I was having a heart attack.

If not for my wife, I would have gone back to my hotel room to lie down and wait for it to pass. I might have fallen asleep and never woken up again. If not for her quick thinking, and the fact that we were 4 minutes away from the ER, I would not be writing this now. Over the next couple of days, I received a few stents to unblock my coronary arteries, and I was sent home with a prescription for a bunch of medications.

Pretty much immediately after coming back home, I went to a VERY dark place. I’ve turned into an extremely emotional person, and my mood can swing on a dime. Out of nowhere, with no discernible rhyme or reason, I’d just start sobbing uncontrollably. I’d talk to someone about what had happened, and I would have to excuse myself so I wouldn’t break down. I’ve discovered that I have wonderful friends who have supported me, which in itself makes me emotional just thinking about it. Accepting that I have heart disease is one of the most difficult things I’ve had to do in my life.

Now that I am writing about it, I feel like writing the whole story down, but I also realize that it’s probably too much for a single post. What I do want to put down here is that I was SO lucky to get away with this. It has turned my whole world upside down, and I’ve made some big changes in my life. If nothing else, it has helped me live in the present rather than dwell on the past or fret about the future. I’ve learned not to give a f*ck; well, maybe I give much less of one, because I’m still the same person who cares too damn much about pretty much everything.

I’ve been thinking about writing posts about health and wellness, because many of us in our industry live decidedly unhealthy lifestyles. I see so many people who I know could be next. I talk about the changes I’ve made to anyone who wants to hear about them, and because it’s considered rude to comment on someone’s eating habits to their face (another lesson learned this year), I feel like sharing this here could be a good thing. Let me know in the comments what you think.

The oppressive terror that I’ve felt for the better part of this past year has subsided and been replaced by a more manageable sense of doom. The way this is going, I may actually end up overcoming my fear altogether, which probably has both an upside and a downside. It would be nice not to be afraid, but if I’m not afraid, I don’t know if I’ll be able to maintain my healthy lifestyle.

So, on the first anniversary of my heart attack, I just want to say I am super happy that I made it! A whole year! On to the next half of my life!

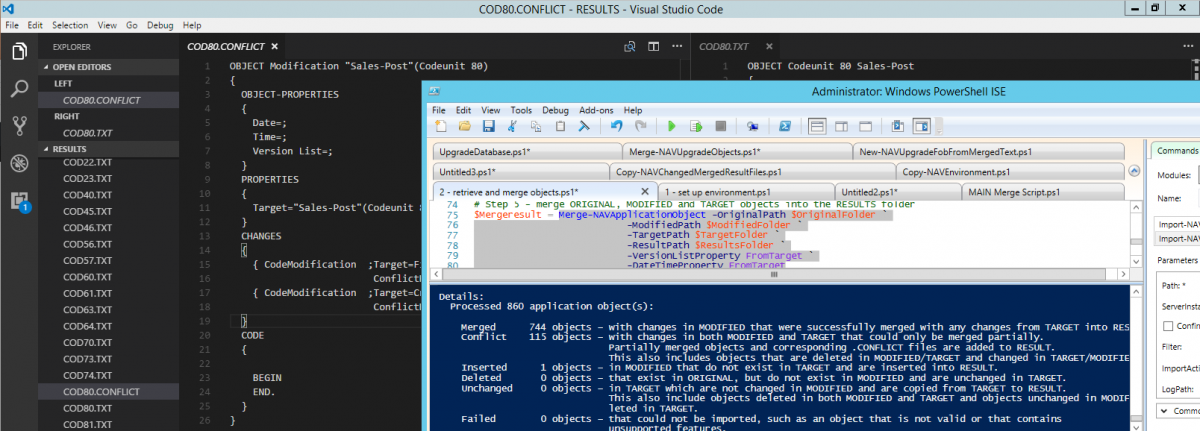

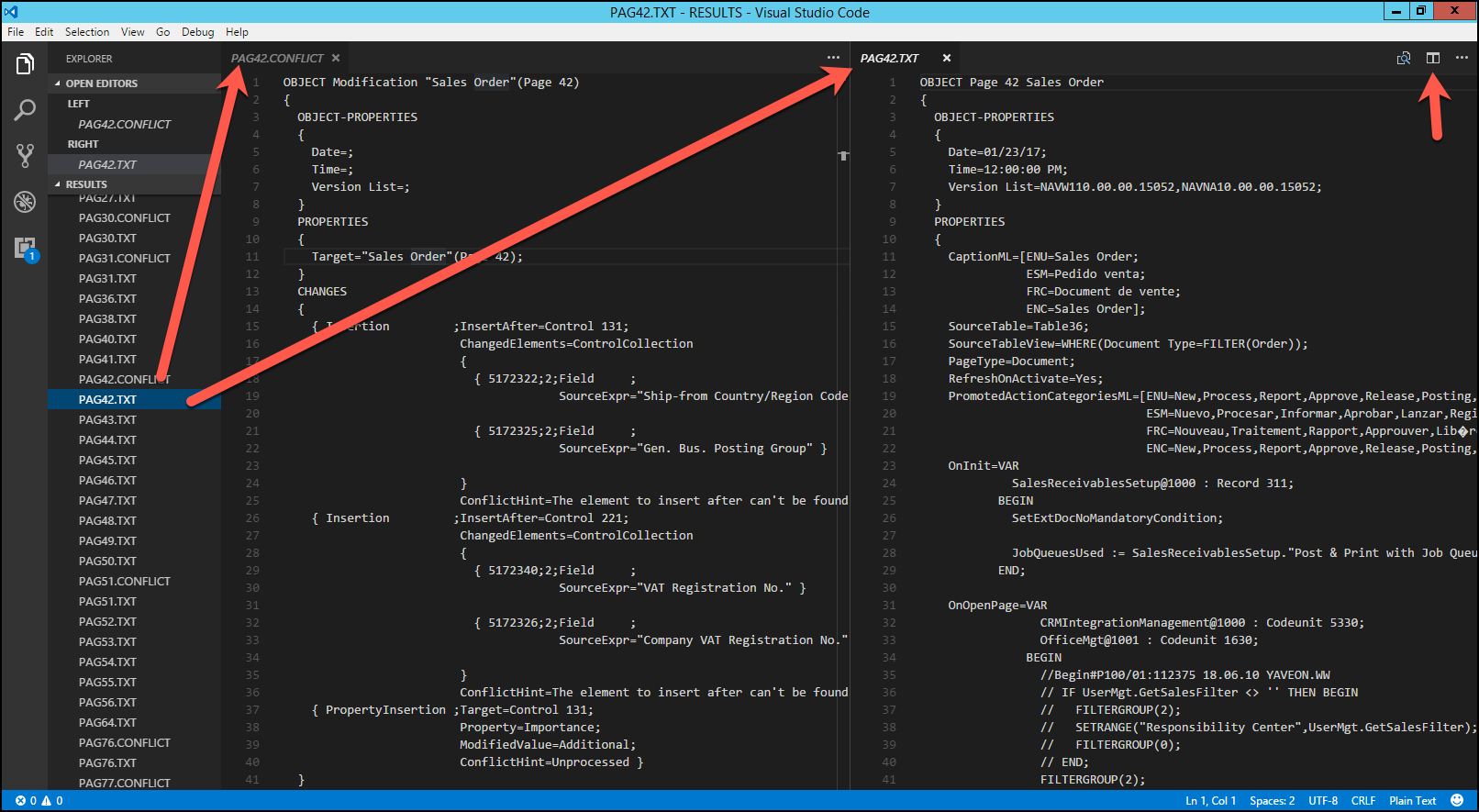

With VSCode installed, you can open the RESULT folder and see its contents in the file browser on the left-hand side. In the upper right-hand corner is a button you can use to split the editor into two (or even three) side-by-side windows. This is very useful for resolving merge conflicts, because you can open the conflict file on one side and the object file on the other. You are editing the actual object file here, so you may want to make a backup copy of the folder before you get started.
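If you’d rather script that backup step, here is a minimal sketch for a POSIX shell. The function name `backup_folder` and the timestamped `RESULT.backup-YYYYMMDD-HHMMSS` naming are my own hypothetical choices, not part of VSCode or any merge tool; all it does is copy the folder with `cp -R` to a sibling directory and print the new path.

```shell
# Hypothetical helper: copy a folder (e.g. RESULT) to a timestamped sibling
# before you start editing its files in place, then print the backup's path.
backup_folder() {
  src="${1%/}"   # drop a trailing slash so the backup lands next to the folder
  if [ ! -d "$src" ]; then
    echo "backup_folder: '$src' is not a directory" >&2
    return 1
  fi
  # Timestamp to the second so successive backups normally get distinct names.
  backup="${src}.backup-$(date +%Y%m%d-%H%M%S)"
  cp -R "$src" "$backup" || return 1
  printf '%s\n' "$backup"
}
```

Something like `backup_folder RESULT && code RESULT` would then make the copy and open the original folder, assuming VSCode’s `code` command-line launcher is on your PATH.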